There will be two types of nodes in a Hadoop cluster – NameNode and DataNode. If you had installed Hadoop in a single machine, you could have installed both of them in a single computer, but in a multi-node cluster they are usually on different machines. In our cluster, we will have one name node and multiple data nodes. Install Hadoop on Multi Node Cluster: Prerequisite. All the three machines have latest Ubuntu 64-bit OS installed. At the of writing this post, Ubuntu 14.04 is the latest version available.

- Setup-hadoop-multi-node-cluster-ubuntu

- Install Hadoop Multi Node Cluster On Ubuntu 18.04

- Hadoop Cluster Install

- Install Hadoop Multi Node Cluster Ubuntu Command

- Setup Hadoop Cluster

Hadoop 2.6.4 fully distributed mode installation on ubuntu 14.04

Hadoop is an Apache open source framework written in java that allows distributed processing of large datasets across clusters of computers using simple programming models.

The Hadoop framework application works in an environment that provides distributed storage and computation across clusters of computers. Hadoop is designed to scale up from single server to thousands of machines, each offering local computation and storage.

Pre Requirements

1) A machine with Ubuntu 14.04 LTS operating system installed.

2) Apache Hadoop 2.6.4 Software (Download Here)

Fully Distributed Mode (Multi Node Cluster)

This post descibes how to install and configure Hadoop clusters ranging from a few nodes to extremely large clusters. To play with Hadoop, you may first want to install it on a single machine (see Single Node Setup).

On All machines - (HadoopMaster, HadoopSlave1, HadoopSlave2)

Setup-hadoop-multi-node-cluster-ubuntu

Step 1 -Update. Open a terminal (CTRL + ALT + T) and type the following sudo command. It is advisable to run this before installing any package, and necessary to run it to install the latest updates, even if you have not added or removed any Software Sources.

Step 2 -Installing Java 7.

Step 3 -Install open-ssh server. It is a cryptographic network protocol for operating network services securely over an unsecured network. The best known example application is for remote login to computer systems by users.

Step 4 -Edit /etc/hosts file.

/etc/hosts file. Add all machines IP address and hostname. Save and close.

Step 5 -Create a Group. We will create a group, configure the group sudo permissions and then add the user to the group. Here 'hadoop' is a group name and 'hduser' is a user of the group.

Step 6 -Configure the sudo permissions for 'hduser'.

Since by default ubuntu text editor is nano we will need to use CTRL + O to edit.

Add the permissions to sudoers.

Use CTRL + X keyboard shortcut to exit out. Enter Y to save the file.

Step 7 -Creating hadoop directory.

Step 8 -Change the ownership and permissions of the directory /usr/local/hadoop. Here 'hduser' is an Ubuntu username.

Step 9 -Creating /app/hadoop/tmp directory.

Step 10 -Change the ownership and permissions of the directory /app/hadoop/tmp. Here 'hduser' is an Ubuntu username.

Install Hadoop Multi Node Cluster On Ubuntu 18.04

Step 11 - Switch User, is used by a computer user to execute commands with the privileges of another user account.

Step 12 -Generating a new SSH public and private key pair on your local computer is the first step towards authenticating with a remote server without a password. Unless there is a good reason not to, you should always authenticate using SSH keys.

Step 13 -Now you can add the public key to the authorized_keys

Step 14 -Adding hostname to list of known hosts. A quick way of making sure that 'hostname' is added to the list of known hosts so that a script execution doesn't get interrupted by a question about trusting computer's authenticity.

Only on HadoopMaster Machine

Step 15 - Switch User, is used by a computer user to execute commands with the privileges of another user account.

Step 16 -ssh-copy-id is a small script which copy your ssh public-key to a remote host; appending it to your remote authorized_keys.

Step 17 -ssh is a program for logging into a remote machine and for executing commands on a remote machine. Check remote login works or not.

Step 18 -Exit from remote login.

Same steps 16, 17 and 18 for other machines (HadoopSalve2).

Step 19 -Change the directory to /home/hduser/Desktop , In my case the downloaded hadoop-2.6.4.tar.gz file is in /home/hduser/Desktop folder. For you it might be in /downloads folder check it.

Step 20 -Untar the hadoop-2.6.4.tar.gz file.

Step 21 -Move the contents of hadoop-2.6.4 folder to /usr/local/hadoop

Step 22 -Edit $HOME/.bashrc file by adding the java and hadoop path.

$HOME/.bashrc file. Add the following lines

Step 23 -Reload your changed $HOME/.bashrc settings

Step 24 -Change the directory to /usr/local/hadoop/etc/hadoop

Step 25 -Edit hadoop-env.sh file.

Step 26 -Add the below lines to hadoop-env.sh file. Save and Close.

Step 27 -Edit core-site.xml file.

Step 28 -Add the below lines to core-site.xml file. Save and Close.

Step 29 -Edit hdfs-site.xml file.

Step 30 -Add the below lines to hdfs-site.xml file. Save and Close.

Step 31 -Edit yarn-site.xml file.

Step 32 -Add the below lines to yarn-site.xml file. Save and Close.

Step 33 -Edit mapred-site.xml file.

Step 34 -Add the below lines to mapred-site.xml file. Save and Close.

Step 35 -Edit slaves file.

Step 36 -Add the below line to slaves file. Save and Close.

Step 37 -Secure copy or SCP is a means of securely transferring computer files between a local host and a remote host or between two remote hosts. Here we are transferring configured hadoop files from master to slave nodes.

Step 38 - Here we are transferring configured .bashrc file from master to slave nodes.

Step 39 -Change the directory to /usr/local/hadoop/sbin

Step 40 -Format the datanode.

Step 41 -Start NameNode daemon and DataNode daemon.

Step 42 -Start yarn daemons.

OR

Instead of steps 41 and 42 you can use below command. It is deprecated now.

Step 43 -The JPS (Java Virtual Machine Process Status Tool) tool is limited to reporting information on JVMs for which it has the access permissions.

Only on slave machines - (HadoopSlave1 and HadoopSlave2)

Only on HadoopMaster Machine

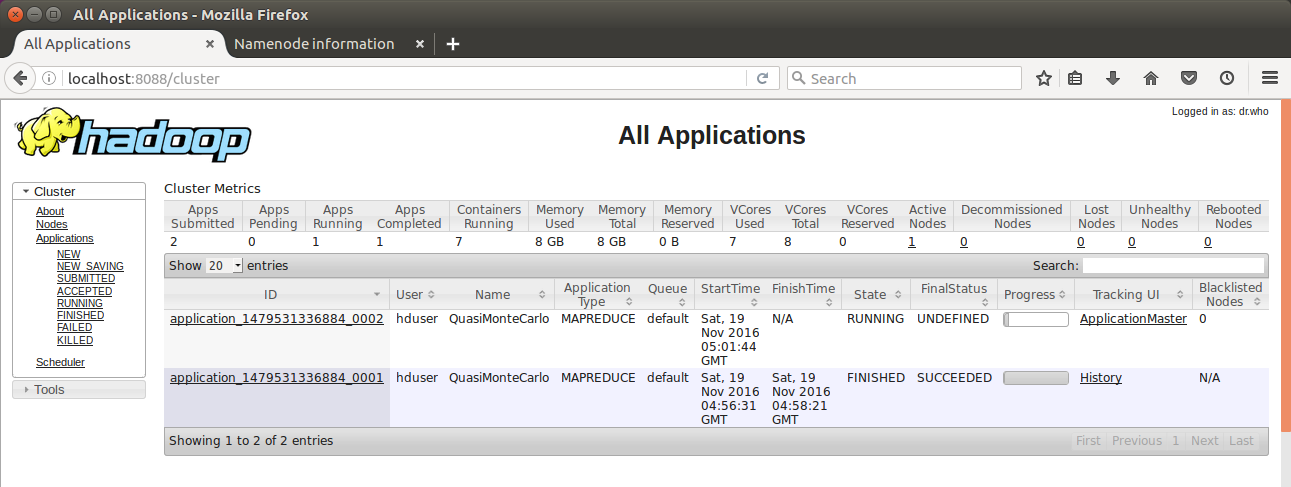

Once the Hadoop cluster is up and running check the web-ui of the components as described below

NameNode Browse the web interface for the NameNode; by default it is available at

ResourceManager Browse the web interface for the ResourceManager; by default it is available at

Step 44 - Make the HDFS directories required to execute MapReduce jobs.

Step 45 -Copy the input files into the distributed filesystem.

Step 46 -Run some of the examples provided.

Step 47 -Examine the output files.

Step 48 -Stop NameNode daemon and DataNode daemon.

Step 49 -Stop Yarn daemons.

OR

Instead of steps 48 and 49 you can use below command. It is deprecated now.

Please share this blog post and follow me for latest updates on

Labels : Hadoop Standalone Mode InstallationHadoop Pseudo Distributed Mode InstallationHadoop HDFS commands usageHadoop Commissioning and Decommissioning DataNodeHadoop WordCount Java ExampleHadoop Mapper/Reducer Java ExampleHadoop Combiner Java ExampleHadoop Partitioner Java ExampleHDFS operations using JavaHadoop Distributed Cache Java Example

Hadoop Cluster Install

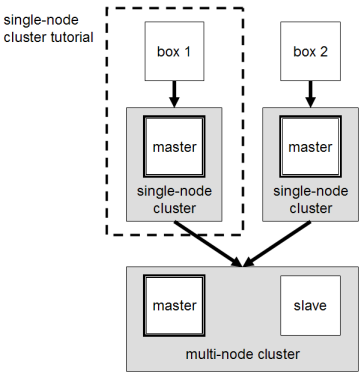

--D. Thiebaut (talk) 14:35, 22 June 2013 (EDT)

|

This tutorial is really a collection of recipes gleaned from the Web and put together to form a record of how a cluster of virtual servers located on the same physical machine was put together to create a Hadoop Cluster for a classroom environment. The information on this Web page is provided for informational purpose only, and no implied warranty is associated with it. These steps will help you setup a virtual cluster to the best of our knowledge, but some steps may not work as illustrated here for you. In such cases, please take the time to send an email to dthiebaut@smith.edu to help maintain the quality of the information provided. Thanks!

Here are the specs of our main machine

- ASUS M5A97 R2.0 AM3+ AMD 970 SATA 6Gb/s USB 3.0 ATX AMD Motherboard ($84.99)

- Corsair Vengeance 16GB (2x8GB) DDR3 1600 MHz (PC3 12800) Desktop Memory (CMZ16GX3M2A1600C10) ($120.22)

- AMD FX-8150 8-Core Black Edition Processor Socket AM3+ FD8150FRGUBOX ($175)

- 2x 1 TB-RAID 1 disks

- used Ctrl-F in POS to call RAID setup.

- setup 2 1TB drives as RAID-1

Ubuntu Desktop V13.04 is the main operating system of the computer. VirtualBox is used to setup the virtual hadoop servers. Since the main machine has an 8-core processor, we create a 6-host virtual cluster.

While installing Ubuntu, use Ubuntu Software Manager GUI and install following packages:

- Apache2+php (to eventually run virtualboxphp)

- VirtualBox

- emacs

- eclipse

- python 3.1

- ddclient (for dynamic hostname)

- open-ssh to access remotely

- setup keyboard ctrl/capslock swap

- setup 2 video displays

Bo Feng @ http://b-feng.blogspot.com/2013/02/create-virtual-ubuntu-cluster-on.html describes the setup of a virtual cluster as a series of simple (though high-level steps):

- Create a virtual machine

- Change the network to bridge mode

- Install Ubuntu Server OS

- Setup the hostname to something like 'node-01'

- Boot up the new virtual machine

- sudo rm -rf /etc/udev/rules.d/70-persistent-net.rules

- Clone the new virtual machine

- Initialize with a new MAC address

- Boot up the cloned virtual machine

- edit /etc/hostname

- change 'node-01' to 'node-02'

- edit /etc/hosts

- change 'node-01' to 'node-02'

- edit /etc/hostname

- Reboot the cloned virtual machine

- Redo step 4 to 6 until there are enough nodes

- Note: the ip address of each node should start with '192.168.' unless you don't have a router.

That's basically it, but we'll go through the fine details here.

Create Virtual Server

This description is based on http://serc.carleton.edu/csinparallel/vm_cluster_macalester.html.

- On the Ubuntu physical machine, download ubuntu-13.04-server-amd64.iso from Ubuntu repository

- Mount the image so that the virtual machines can install directly from it: Open windows manager, go to Downloads, right click (need 3-button mouse) on ubuntu-13.04-server-amd64.iso and pick open with archive-mounter

- Start VirtualBox (should be in Applications/Accessories)

- Create new virtual box with these attributes:

- Linux

- Ubuntu 64 bits

- 2 GB virtual RAM

- Create virtual hard disk, VDI

- dynamically allocated

- set the name to hadoop100, with 8.00 GB drive size

- install Ubuntu (pick language, keyboard, time zone, etc...)

- use a superuser name and password

- partition the disk using the guided feature. Select LVM

- install security updates automatically

- select the OpenSSH server (leave all others unselected)

- Start the Virtual Machine Hadoop100. It works!

Setup Network

- Using virtualBox, Stop hadoop100

- Right click on the machine and in the settings, go to Network

- select Enable Network Adapter

- select Bridged Adapter and pick eth0.

- Using virtualBox restart hadoop100

- from its terminal window, ping google.com and verify that site is reachable from the virtual server.

- (get the machine's ip address using ifconfig -a if necessary)

- Change server name by editing /etc/hostname and set to hadoop100

- similarly, edit /etc/hosts to contain line 127.0.1.1 hadoop100

Setup Dynamic-Host Address

On another computer, connect to dyndns and register hadoop100 with this system.

- on Hadoop0, install ddclient

- and enter the full address of the server (e.g. hadoop100.webhop.net)

- If you need to modify the information collected by the installation of ddclient, it can be found /etc/ddclient.conf

- wait a few minutes and verify that hadoop100 is now reachable via ssh from a remote computer.

Install Hadoop

- Install java

- Disable ipv6 (recommended by many users setting up Hadoop)

- and add these lines at the end:

- reboot hadoop100

- Now follow directives in Michael Noll's excellent tutorial for building a 1-node Hadoop cluster (cached copy]).

- create hduser user and hadoop group (already existed)

- create ssh password-less entry

- open /usr/local/hadoop/conf/hadoop-env.sh and set JAVA_HOME

- Download hadoop from hadoop repository

- setup user hduser by loging in a hduser

- and add

- edit /usr/local/hadoop/conf/hadoop-env.sh and set JAVA_HOME

- Edit /usr/local/hadoop/conf/core-site.xml and add

- Edit /usr/local/hadoop/conf/mapred-site.xml and add

- Edit /usr/local/hadoop/conf/hdfs-site.xml and add

- Format namenode. As user hduser enter the command:

- as super user run the following commands:

- back as hduser

All set!

Give the Right Permissions to New User for Hadoop

This is taken and adapted from http://amalgjose.wordpress.com/2013/02/09/setting-up-multiple-users-in-hadoop-clusters/

- as superuser, add new user mickey to Ubuntu

- login as hduser

- login as mickey

- change the default shell to bash

- emacs .bashrc and add these lines at the end:

- log out and back in as Mickey

Test

- create input directory and copy some random text files there.

- run hadoop wordcount job on the files in the new directory to test the setup.

- It should work correctly and create a list of words and their frequency of occurrence.

Clone and Initialize

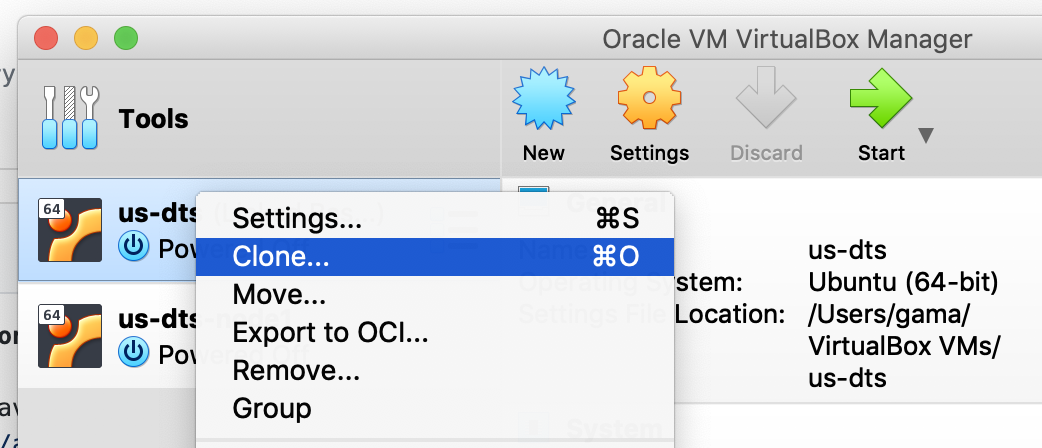

We've found that cloning a server is not always a reliable way to copy all the users and applications that have been added to the initial Ubuntu server. Instead exporting a copy of the first server as an instance and importing it back as a new virtual server works well.

This work is done on the physical server, using the VirtualBox Manager. We'll use hadoop100 as Master for cloning

- (deprecated)

Before closing down hadoop100 before cloning it, connect via ssh to it, and remove the file /etc/udev/rules.d/70-persistent-net.rules (it will be recreated automatically. No need to move it under a different name).

Not doing this will prevent the network interface from being brought up.

- Close hadoop100 and power it off.

- Click on the machine hadoop100 in VBox manager. Select Export Appliance in the File menu. Accept the default options and export it.

- In the File menu, click on Import Appliance, and call it hadoop101. Give it 2GB or RAM.

- Do not check box labeled 'Reinitialize the MAC address of all network cards'

- right click on new machine, pick settings and verify/adjust these quantities:

- System: select 2GB RAM

- Processor: 1CPU

- Network: bridged adapter

- start the newly cloned virtual machine (hadoop101)

- it may complain that it is not finding the network, in which case login as the super user (the same as for hadoop100) and do this:

- Run this command in the terminal:

ifconfig -a - Notice the first Ethernet adapter id. It should be 'eth?'

- edit the file at /etc/network/interface. Change the adapter (twice) from 'eth0' to the adapter id in the previous step

- Save the file

- reboot

- Run this command in the terminal:

- Reboot the virtual appliance if you have skipped the previous step, and connect to it in the terminal window provided by VirtualBox:

- replace hadoop100 by hadoop101 in several files:

- reboot to make the changes take effect:

- You should be able to remotely ssh to the new machine from another computer, now that ddclient is setup. You may have to wait a few minutes, though, for the dynamic host name to be updated on the Internet... 5 minutes or so?

Making the Clone run as a Hadoop 1-Node Cluster

Install Hadoop Multi Node Cluster Ubuntu Command

In this section we make sure the new virtual server can work as an 1-node Hadoop cluster, and we repeat the steps from above.

The clone should contain all the hadoop setup we did for hadoop100. We'll just clean it up a bit.

- run these commands in a terminal (you should be able to ssh to your new clone by now). We assume that the new clone is named hadoop101.

- The input folder should already exist in the hduser home directory, so we need to copy it again to the hadoop file-system:

- Now we should be able to run our wordcount test:

- Verify that we have a result file (feel free to check its contents):

- This verifies that our newly cloned server can run hadoop jobs as a 1-node cluster.

- Follow the excellent tutorial by Michael Noll on how to setup a 2-node cluster (cached copy]). Simpley use hadoop100 whenever Noll uses master and hadoop101 whenever he uses slave. The hadoop environment where files need to be modified is in /usr/local/hadoop, and NOT in /etc/hadoop.

- Very important: comment out the second line of /etc/hosts on the master as follows:

- as suggested in http://stackoverflow.com/questions/8872807/hadoop-datanodes-cannot-find-namenode, otherwise the slave cannot connect to the master that somehow binds to the wrong address/port when trying to listen for the slave, generating entries of the form INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/... in the log file of the slave...

- Cleanup the hadoop environment in bothhadoop100 and hadoop101:

Setup Hadoop Cluster

- On hadoop100 (the master), recreate a clean namenode:

- Start the hadoop system on hadoop100 (the master):

- Assuming that the input folder with dummy files is still in the hduser directory, copy it to the hadoop file system:

- Run the test:

- there should be an easy way to create script to backup the virtual servers automatically, say via cron jobs. The discussion here is a good place to start...

Comments are closed.